29 01 2021

[2021-January-New]Exam Pass 100%!Braindump2go DP-200 Dumps DP-200 256 Instant Download[Q241-Q256]

2021/January Latest Braindump2go DP-200 Exam Dumps with PDF and VCE Free Updated Today! Following are some new DP-200 Real Exam Questions!

QUESTION 241

You have an enterprise-wide Azure Data Lake Storage Gen2 account. The data lake is accessible only through an Azure virtual network named VNET1.

You are building a SQL pool in Azure Synapse that will use data from the data lake.

Your company has a sales team. All the members of the sales team are in an Azure Active Directory group named Sales. POSIX controls are used to assign the Sales group access to the files in the data lake.

You plan to load data to the SQL pool every hour.

You need to ensure that the SQL pool can load the sales data from the data lake.

Which three actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Create a managed identity.

B. Use the shared access signature (SAS) as the credentials for the data load process.

C. Add the managed identity to the Sales group.

D. Add your Azure Active Directory (Azure AD) account to the Sales group.

E. Create a shared access signature (SAS).

F. Use the managed identity as the credentials for the data load process.

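Answer: ACF

Explanation:

A: The managed identity grants permissions to the dedicated SQL pools in the workspace.

C: Access to the files is granted through POSIX controls on the Sales group, so the managed identity must be a member of that group.

F: The hourly load must authenticate as the managed identity. Adding your own Azure AD account to the Sales group (D) does not give the load process access.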

Note: Managed identity for Azure resources is a feature of Azure Active Directory. The feature provides Azure services with an automatically managed identity in Azure AD.

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/security/synapse-workspace-managed-identity

QUESTION 242

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure subscription that contains an Azure Storage account.

You plan to implement changes to a data storage solution to meet regulatory and compliance standards.

Every day, Azure needs to identify and delete blobs that were NOT modified during the last 100 days.

Solution: You schedule an Azure Data Factory pipeline with a delete activity.

Does this meet the goal?

A. Yes

B. No

Answer: A

Explanation:

You can use the Delete Activity in Azure Data Factory to delete files or folders from on-premises storage stores or cloud storage stores.

Azure Blob storage is supported.

Note: You can also apply an Azure Blob storage lifecycle policy.
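The selection rule in this scenario (blobs whose last-modified time is more than 100 days old) can be sketched in plain Python. This is only an illustration of the cutoff logic: the `blobs_to_delete` helper and the sample blob names are hypothetical, and the code does not call Data Factory or the Azure Storage SDK.

```python
from datetime import datetime, timedelta, timezone

RETENTION_DAYS = 100  # from the scenario: delete blobs not modified in the last 100 days


def blobs_to_delete(last_modified_by_name, now):
    """Return the names of blobs whose last modification is older than the cutoff."""
    cutoff = now - timedelta(days=RETENTION_DAYS)
    return sorted(
        name for name, modified in last_modified_by_name.items() if modified < cutoff
    )


# Hypothetical inventory: blob name -> last-modified timestamp (UTC).
now = datetime(2021, 1, 29, tzinfo=timezone.utc)
inventory = {
    "reports/2020-q3.csv": datetime(2020, 9, 30, tzinfo=timezone.utc),
    "reports/2020-q4.csv": datetime(2020, 12, 31, tzinfo=timezone.utc),
    "logs/old.json": datetime(2020, 10, 1, tzinfo=timezone.utc),
}
stale = blobs_to_delete(inventory, now)
print(stale)
```

In a real pipeline the same cutoff would be expressed as a last-modified filter on the delete activity or as a lifecycle rule, rather than computed by hand.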

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/delete-activity

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-lifecycle-management-concepts?tabs=azure-portal

QUESTION 243

You plan to build a structured streaming solution in Azure Databricks. The solution will count new events in five-minute intervals and report only events that arrive during the interval. The output will be sent to a Delta Lake table.

Which output mode should you use?

A. complete

B. update

C. append

Answer: C

Explanation:

Append Mode: Only new rows appended in the result table since the last trigger are written to external storage. This is applicable only for the queries where existing rows in the Result Table are not expected to change.

Incorrect Answers:

A: Complete Mode: The entire updated result table is written to external storage. It is up to the storage connector to decide how to handle the writing of the entire table.

B: Update Mode: Only the rows that were updated in the result table since the last trigger are written to external storage. This is different from Complete Mode in that Update Mode outputs only the rows that have changed since the last trigger. If the query doesn’t contain aggregations, it is equivalent to Append mode.
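The difference between the three modes can be illustrated with a small pure-Python model. It is a simplified sketch, not the Spark API: `rows_written` is a hypothetical helper, the window labels and counts are made up, and the append rule assumes a watermark that marks a window as final before its row is written.

```python
def rows_written(mode, previous, current, newly_final):
    """Return the result-table rows that one trigger writes to the sink.

    previous / current map a window label to its event count before and
    after the trigger; newly_final lists windows the watermark just closed.
    """
    if mode == "complete":
        # The whole result table is rewritten on every trigger.
        return dict(current)
    if mode == "update":
        # Only rows whose value changed (or that are new) since the last trigger.
        return {w: c for w, c in current.items() if previous.get(w) != c}
    if mode == "append":
        # Only rows that will never change again, each written exactly once.
        return {w: current[w] for w in newly_final}
    raise ValueError(f"unknown output mode: {mode}")


# Hypothetical state of the five-minute counts around one trigger.
previous = {"08:00": 3, "08:05": 1}
current = {"08:00": 3, "08:05": 4, "08:10": 2}
newly_final = ["08:05"]  # assumption: the watermark just passed the end of this window

for mode in ("complete", "update", "append"):
    print(mode, rows_written(mode, previous, current, newly_final))
```

In this model only append writes each five-minute count a single time, which is why it suits a Delta Lake table that should receive one row per interval.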

Reference:

https://docs.databricks.com/getting-started/spark/streaming.html

QUESTION 244

You have a SQL pool in Azure Synapse.

You discover that some queries fail or take a long time to complete.

You need to monitor for transactions that have rolled back.

Which dynamic management view should you query?

A. sys.dm_pdw_nodes_tran_database_transactions

B. sys.dm_pdw_waits

C. sys.dm_pdw_request_steps

D. sys.dm_pdw_exec_sessions

Answer: A

Explanation:

You can use Dynamic Management Views (DMVs) to monitor your workload including investigating query execution in SQL pool.

If your queries are failing or taking a long time to proceed, you can check and monitor if you have any transactions rolling back.

Example:

    -- Monitor rollback
    SELECT
        SUM(CASE WHEN t.database_transaction_next_undo_lsn IS NOT NULL THEN 1 ELSE 0 END),
        t.pdw_node_id,
        nod.[type]
    FROM sys.dm_pdw_nodes_tran_database_transactions t
    JOIN sys.dm_pdw_nodes nod ON t.pdw_node_id = nod.pdw_node_id
    GROUP BY t.pdw_node_id, nod.[type]

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-manage-monitor#monitor-transaction-log-rollback

QUESTION 245

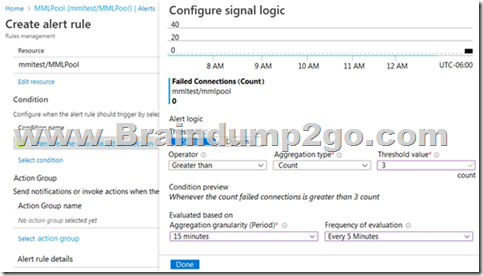

You have an alert on a SQL pool in Azure Synapse that uses the signal logic shown in the exhibit.

On the same day, failures occur at the following times:

08:01

08:03

08:04

08:06

08:11

08:16

08:19

The evaluation period starts on the hour.

At which times will alert notifications be sent?

A. 08:15 only

B. 08:10, 08:15, and 08:20

C. 08:05 and 08:10 only

D. 08:10 only

E. 08:05 only

Answer: B

Explanation:

https://docs.microsoft.com/en-us/azure/azure-sql/database/alerts-insights-configure-portal

QUESTION 246

You plan to monitor the performance of Azure Blob storage by using Azure Monitor.

You need to be notified when there is a change in the average time it takes for a storage service or API operation type to process requests.

For which two metrics should you set up alerts? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. SuccessE2ELatency

B. SuccessServerLatency

C. UsedCapacity

D. Egress

E. Ingress

Answer: AB

Explanation:

Success E2E Latency: The average end-to-end latency of successful requests made to a storage service or the specified API operation. This value includes the required processing time within Azure Storage to read the request, send the response, and receive acknowledgment of the response.

Success Server Latency: The average time used to process a successful request by Azure Storage. This value does not include the network latency specified in SuccessE2ELatency.

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blob-scalable-app-verify-metrics

QUESTION 247

You create an Azure Databricks cluster and specify an additional library to install.

When you attempt to load the library to a notebook, the library is not found.

You need to identify the cause of the issue.

What should you review?

A. workspace logs

B. notebook logs

C. global init scripts logs

D. cluster event logs

Answer: C

Explanation:

Cluster-scoped Init Scripts: Init scripts are shell scripts that run during the startup of each cluster node before the Spark driver or worker JVM starts. Databricks customers use init scripts for various purposes such as installing custom libraries, launching background processes, or applying enterprise security policies.

Logs for Cluster-scoped init scripts are now more consistent with Cluster Log Delivery and can be found in the same root folder as driver and executor logs for the cluster.

Reference:

https://databricks.com/blog/2018/08/30/introducing-cluster-scoped-init-scripts.html

QUESTION 248

Hotspot Question

You have a SQL pool in Azure Synapse.

You plan to load data from Azure Blob storage to a staging table. Approximately 1 million rows of data will be loaded daily. The table will be truncated before each daily load.

You need to create the staging table. The solution must minimize how long it takes to load the data to the staging table.

How should you configure the table? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

Box 1: Round-robin

Round-robin tables are useful for improving loading speed, which is the stated goal for this staging table. Rows are spread evenly across the distributions without computing a hash.

Incorrect:

Hash-distributed tables improve query performance on large fact tables. They can have very large numbers of rows and still achieve high performance, but loads into a round-robin table are faster.

Box 2: Heap

When you are temporarily landing data in a staging table, a heap makes the load faster. For optimal compression and performance of clustered columnstore tables, a minimum of 1 million rows per distribution and partition is needed, and 1 million rows per day spread over all distributions falls far short of that.

Box 3: None

Table partitions enable you to divide your data into smaller groups of data. In most cases, table partitions are created on a date column.

Partition switching can be used to quickly remove or replace a section of a table. Neither benefit applies here, because the whole table is truncated before each daily load.
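The columnstore guideline quoted above (at least 1 million rows per distribution and partition) can be checked against this scenario with a quick calculation. This is a back-of-the-envelope sketch that assumes the 60 distributions of a dedicated SQL pool and a perfectly even spread of rows; `rows_per_distribution` is a hypothetical helper.

```python
DISTRIBUTIONS = 60  # a dedicated SQL pool spreads every table over 60 distributions
COLUMNSTORE_MIN_ROWS = 1_000_000  # guideline: rows per distribution and partition


def rows_per_distribution(total_rows, partitions=1):
    """Average rows landing in one distribution of one partition (even spread assumed)."""
    return total_rows / (DISTRIBUTIONS * partitions)


daily_rows = 1_000_000  # from the scenario: about 1 million rows loaded per day
per_dist = rows_per_distribution(daily_rows)
meets_guideline = per_dist >= COLUMNSTORE_MIN_ROWS

print(round(per_dist), meets_guideline)
```

Under these assumptions each distribution receives only a few thousand rows per load, and partitioning the table would divide that number further.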

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-partition

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-distribute

QUESTION 249

Hotspot Question

You have two Azure Storage accounts named Storage1 and Storage2. Each account contains an Azure Data Lake Storage file system. The system has files that contain data stored in the Apache Parquet format.

You need to copy folders and files from Storage1 to Storage2 by using a Data Factory copy activity. The solution must meet the following requirements:

– No transformations must be performed.

– The original folder structure must be retained.

How should you configure the copy activity? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

Box 1: Parquet

For Parquet datasets, the type property of the copy activity source must be set to ParquetSource.

Box 2: PreserveHierarchy

PreserveHierarchy (default): Preserves the file hierarchy in the target folder. The relative path of the source file to the source folder is identical to the relative path of the target file to the target folder.

Incorrect Answers:

FlattenHierarchy: All files from the source folder are in the first level of the target folder. The target files have autogenerated names.

MergeFiles: Merges all files from the source folder to one file. If the file name is specified, the merged file name is the specified name. Otherwise, it’s an autogenerated file name.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/format-parquet

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-data-lake-storage

QUESTION 250

Hotspot Question

You have the following Azure Stream Analytics query.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

Box 1: Yes

You can now use a new extension of Azure Stream Analytics SQL to specify the number of partitions of a stream when reshuffling the data.

The outcome is a stream that has the same partition scheme. Please see below for an example:

    WITH step1 AS (SELECT * FROM [input1] PARTITION BY DeviceID INTO 10),
         step2 AS (SELECT * FROM [input2] PARTITION BY DeviceID INTO 10)
    SELECT * INTO [output]
    FROM step1 PARTITION BY DeviceID
    UNION step2 PARTITION BY DeviceID

Note: The new extension of Azure Stream Analytics SQL includes a keyword INTO that allows you to specify the number of partitions for a stream when performing reshuffling using a PARTITION BY statement.

Box 2: Yes

When joining two streams of data explicitly repartitioned, these streams must have the same partition key and partition count.

Box 3: Yes

Streaming Units (SUs) represent the computing resources that are allocated to execute a Stream Analytics job. The higher the number of SUs, the more CPU and memory resources are allocated for your job.

In general, the best practice is to start with 6 SUs for queries that don’t use PARTITION BY.

Here there are 10 partitions, so 6×10 = 60 SUs is a reasonable allocation.

Note: Remember, Streaming Unit (SU) count, which is the unit of scale for Azure Stream Analytics, must be adjusted so the number of physical resources available to the job can fit the partitioned flow. In general, six SUs is a good number to assign to each partition. In case there are insufficient resources assigned to the job, the system will only apply the repartition if it benefits the job.
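The sizing rule above (roughly six SUs per partition) is simple enough to write down as a helper. This is a sketch of the arithmetic only; `suggested_streaming_units` is a hypothetical name, and actual capacity planning should be validated against the job's resource metrics.

```python
SUS_PER_PARTITION = 6  # rule of thumb quoted in the explanation


def suggested_streaming_units(partition_count):
    """Starting-point SU allocation for a job repartitioned into partition_count streams."""
    if partition_count < 1:
        raise ValueError("partition_count must be at least 1")
    return SUS_PER_PARTITION * partition_count


print(suggested_streaming_units(10))
```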

Reference:

https://azure.microsoft.com/en-in/blog/maximize-throughput-with-repartitioning-in-azure-stream-analytics/

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-streaming-unit-consumption

QUESTION 251

Hotspot Question

You are building an Azure Stream Analytics job to identify how much time a user spends interacting with a feature on a webpage.

The job receives events based on user actions on the webpage. Each row of data represents an event.

Each event has a type of either ‘start’ or ‘end’.

You need to calculate the duration between start and end events.

How should you complete the query? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

Box 1: DATEDIFF

DATEDIFF function returns the count (as a signed integer value) of the specified datepart boundaries crossed between the specified startdate and enddate.

Syntax: DATEDIFF ( datepart , startdate, enddate )

Box 2: LAST

The LAST function can be used to retrieve the last event within a specific condition. In this example, the condition is an event of type Start, partitioning the search by PARTITION BY user and feature. This way, every user and feature is treated independently when searching for the Start event. LIMIT DURATION limits the search back in time to 1 hour between the End and Start events.

Example:

    SELECT
        [user],
        feature,
        DATEDIFF(
            second,
            LAST(Time) OVER (PARTITION BY [user], feature LIMIT DURATION(hour, 1) WHEN Event = 'start'),
            Time) AS duration
    FROM input TIMESTAMP BY Time
    WHERE
        Event = 'end'
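To make the query's behavior concrete, the same matching logic can be sketched in plain Python: for every end event, look up the most recent start event for the same user and feature within the last hour and compute the difference in seconds. The `durations` function and the sample events are hypothetical, and the sketch assumes events arrive in time order with whole-second timestamps.

```python
from datetime import datetime, timedelta


def durations(events, lookback=timedelta(hours=1)):
    """events: iterable of (time, user, feature, event_type), sorted by time."""
    last_start = {}  # (user, feature) -> time of the most recent 'start' event
    results = []
    for time, user, feature, event_type in events:
        key = (user, feature)
        if event_type == "start":
            last_start[key] = time
        elif event_type == "end":
            start = last_start.get(key)
            if start is not None and time - start <= lookback:
                seconds = int((time - start).total_seconds())
            else:
                seconds = None  # no start within the lookback: duration is unknown
            results.append((user, feature, seconds))
    return results


# Hypothetical event stream.
events = [
    (datetime(2021, 1, 29, 8, 0, 0), "alice", "search", "start"),
    (datetime(2021, 1, 29, 8, 0, 10), "bob", "search", "start"),
    (datetime(2021, 1, 29, 8, 0, 45), "alice", "search", "end"),
    (datetime(2021, 1, 29, 8, 2, 10), "bob", "search", "end"),
]
result = durations(events)
print(result)
```

Keying the lookup on the (user, feature) pair plays the role of PARTITION BY, so one user's start event is never matched with another user's end event.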

Reference:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-stream-analytics-query-patterns

QUESTION 252

Hotspot Question

You have an Azure Stream Analytics job named ASA1.

The Diagnostic settings for ASA1 are configured to write errors to Log Analytics.

ASA1 reports an error, and the following message is sent to Log Analytics.

You need to write a Kusto query language query to identify all instances of the error and return the message field.

How should you complete the query? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

Box 1: DataErrorType

The DataErrorType is InputDeserializerError.InvalidData.

Box 2: Message

Retrieve the message.

Reference:

https://docs.microsoft.com/en-us/azure/stream-analytics/data-errors

QUESTION 253

Hotspot Question

You are processing streaming data from vehicles that pass through a toll booth.

You need to use Azure Stream Analytics to return the license plate, vehicle make, and hour the last vehicle passed during each 10-minute window.

How should you complete the query? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

Box 1: MAX

The first step of the query finds the maximum time stamp in each 10-minute window, that is, the time stamp of the last event for that window. The second step joins the results of the first query with the original stream to find the events that match the last time stamps in each window.

Query:

    WITH LastInWindow AS
    (
        SELECT
            MAX(Time) AS LastEventTime
        FROM
            Input TIMESTAMP BY Time
        GROUP BY
            TumblingWindow(minute, 10)
    )
    SELECT
        Input.License_plate,
        Input.Make,
        Input.Time
    FROM
        Input TIMESTAMP BY Time
        INNER JOIN LastInWindow
        ON DATEDIFF(minute, Input, LastInWindow) BETWEEN 0 AND 10
        AND Input.Time = LastInWindow.LastEventTime

Box 2: TumblingWindow

Tumbling windows are a series of fixed-sized, non-overlapping and contiguous time intervals.

Box 3: DATEDIFF

DATEDIFF is a date-specific function that compares and returns the time difference between two DateTime fields. For more information, refer to date functions.
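The two-step pattern (find the latest time stamp per 10-minute window, then pick the event carrying that time stamp) can be sketched in plain Python. This is an illustration only: `last_per_window` and the sample vehicles are hypothetical, and for simplicity a window here includes its start and excludes its end, which may differ from how Stream Analytics treats events that fall exactly on a window boundary.

```python
from datetime import datetime

WINDOW_MINUTES = 10


def window_start(ts):
    """Round a timestamp down to the start of its 10-minute tumbling window."""
    return ts.replace(minute=ts.minute - ts.minute % WINDOW_MINUTES, second=0, microsecond=0)


def last_per_window(events):
    """Return the latest event of each window, ordered by window start."""
    latest = {}
    for event in events:
        key = window_start(event["time"])
        if key not in latest or event["time"] > latest[key]["time"]:
            latest[key] = event
    return [latest[key] for key in sorted(latest)]


# Hypothetical toll-booth readings.
events = [
    {"time": datetime(2021, 1, 29, 8, 1), "license_plate": "ABC123", "make": "Honda"},
    {"time": datetime(2021, 1, 29, 8, 7), "license_plate": "XYZ789", "make": "Toyota"},
    {"time": datetime(2021, 1, 29, 8, 12), "license_plate": "JKL456", "make": "Ford"},
]
last = last_per_window(events)
for event in last:
    print(event["license_plate"], event["make"], event["time"].hour)
```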

Reference:

https://docs.microsoft.com/en-us/stream-analytics-query/tumbling-window-azure-stream-analytics

QUESTION 254

Drag and Drop Question

You have an Azure Stream Analytics job that is a Stream Analytics project solution in Microsoft Visual Studio. The job accepts data generated by IoT devices in the JSON format.

You need to modify the job to accept data generated by the IoT devices in the Protobuf format.

Which three actions should you perform from Visual Studio in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Answer:

Explanation:

Step 1: Add an Azure Stream Analytics Custom Deserializer Project (.NET) project to the solution.

Create a custom deserializer

1. Open Visual Studio and select File > New > Project. Search for Stream Analytics and select Azure Stream Analytics Custom Deserializer Project (.NET). Give the project a name, like Protobuf Deserializer.

2. In Solution Explorer, right-click your Protobuf Deserializer project and select Manage NuGet Packages from the menu. Then install the Microsoft.Azure.StreamAnalytics and Google.Protobuf NuGet packages.

3. Add the MessageBodyProto class and the MessageBodyDeserializer class to your project.

4. Build the Protobuf Deserializer project.

Step 2: Add .NET deserializer code for Protobuf to the custom deserializer project.

Azure Stream Analytics has built-in support for three data formats: JSON, CSV, and Avro. With custom .NET deserializers, you can read data from other formats such as Protocol Buffer, Bond and other user defined formats for both cloud and edge jobs.

Step 3: Add an Azure Stream Analytics Application project to the solution.

Add an Azure Stream Analytics project

1. In Solution Explorer, right-click the Protobuf Deserializer solution and select Add > New Project. Under Azure Stream Analytics > Stream Analytics, choose Azure Stream Analytics Application. Name it ProtobufCloudDeserializer and select OK.

2. Right-click References under the ProtobufCloudDeserializer Azure Stream Analytics project. Under Projects, add Protobuf Deserializer. It should be automatically populated for you.

Reference:

https://docs.microsoft.com/en-us/azure/stream-analytics/custom-deserializer

QUESTION 255

Drag and Drop Question

You have an Azure data factory.

You need to ensure that pipeline-run data is retained for 120 days. The solution must ensure that you can query the data by using the Kusto query language.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.

Answer:

Explanation:

Step 1: Create an Azure Storage account that has a lifecycle policy.

To automate common data management tasks, Microsoft created a solution based on Azure Data Factory. The service, Data Lifecycle Management, makes frequently accessed data available and archives or purges other data according to retention policies. Teams across the company use the service to reduce storage costs, improve app performance, and comply with data retention policies.

Step 2: Create a Log Analytics workspace that has Data Retention set to 120 days.

Data Factory stores pipeline-run data for only 45 days. Use Azure Monitor if you want to keep that data for a longer time. With Monitor, you can route diagnostic logs for analysis to multiple different targets.

Storage Account: Save your diagnostic logs to a storage account for auditing or manual inspection. You can use the diagnostic settings to specify the retention time in days.

Step 3: From Azure Portal, add a diagnostic setting.

Step 4: Send the data to a Log Analytics workspace.

Event Hub: A pipeline that transfers events from services to Azure Data Explorer.

Keeping Azure Data Factory metrics and pipeline-run data.

Configure diagnostic settings and workspace.

Create or add diagnostic settings for your data factory.

1. In the portal, go to Monitor. Select Settings > Diagnostic settings.

2. Select the data factory for which you want to set a diagnostic setting.

3. If no settings exist on the selected data factory, you’re prompted to create a setting. Select Turn on diagnostics.

4. Give your setting a name, select Send to Log Analytics, and then select a workspace from Log Analytics Workspace.

5. Select Save.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/monitor-using-azure-monitor

QUESTION 256

Hotspot Question

You have an Azure data factory that has two pipelines named PipelineA and PipelineB.

PipelineA has four activities as shown in the following exhibit.

![]()

PipelineB has two activities as shown in the following exhibit.

![]()

You create an alert for the data factory that uses Failed pipeline runs metrics for both pipelines and all failure types. The metric has the following settings:

Operator: Greater than

Aggregation type: Total

Threshold value: 2

Aggregation granularity (Period): 5 minutes

Frequency of evaluation: Every 5 minutes

Data Factory monitoring records the failures shown in the following table.

For each of the following statements, select yes if the statement is true. Otherwise, select no.

NOTE: Each correct answer selection is worth one point.

Answer:

Explanation:

Box 1: No

Only one failure at this point.

Box 2: No

Only two failures within 5 minutes.

Box 3: Yes

More than two (three) failures in 5 minutes.
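The alert rule described in this question (Total greater than 2 over a 5-minute period) can be modeled with a short Python sketch. The failure times below are hypothetical, since the table from the question is not reproduced here, and the sketch assumes evaluation periods aligned to the clock, which is a simplification of how Azure Monitor slides its evaluation window.

```python
from collections import Counter
from datetime import datetime

THRESHOLD = 2  # alert when the total is greater than 2
PERIOD_MINUTES = 5  # aggregation granularity


def period_start(ts):
    """Round a timestamp down to the start of its 5-minute period."""
    return ts.replace(minute=ts.minute - ts.minute % PERIOD_MINUTES, second=0, microsecond=0)


def alert_by_period(failure_times):
    """Map each period start to True if its failure total exceeds the threshold."""
    totals = Counter(period_start(t) for t in failure_times)
    return {start: total > THRESHOLD for start, total in sorted(totals.items())}


# Hypothetical failures: one, then two, then three within a single period.
failures = [
    datetime(2021, 1, 29, 9, 1),
    datetime(2021, 1, 29, 9, 6),
    datetime(2021, 1, 29, 9, 8),
    datetime(2021, 1, 29, 9, 11),
    datetime(2021, 1, 29, 9, 12),
    datetime(2021, 1, 29, 9, 14),
]
alerts = alert_by_period(failures)
for start, fired in alerts.items():
    print(start.strftime("%H:%M"), fired)
```

Because the operator is "Greater than", a period with exactly two failures does not trigger the alert; it takes a third failure in the same period.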

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/alerts-insights-configure-portal

Implementing an Azure Data Solution

Resources From:

1.2021 Latest Braindump2go DP-200 Exam Dumps (PDF & VCE) Free Share:

https://www.braindump2go.com/dp-200.html

2.2021 Latest Braindump2go DP-200 PDF and DP-200 VCE Dumps Free Share:

https://drive.google.com/drive/folders/1Lr1phOaAVcbL-_R5O-DFweNoShul0W13?usp=sharing

3.2020 Free Braindump2go DP-200 Exam Questions Download:

https://www.braindump2go.com/free-online-pdf/DP-200-PDF-Dumps(241-256).pdf

Free Resources from Braindump2go,We Devoted to Helping You 100% Pass All Exams!
